It seems everyone is interested in gathering usage data from MOSS, and everyone is unsatisfied with the simple reports that can be generated out-of-the-box. Yet oddly enough, I was unable to find much information at all about ETL and reporting on this data. This is probably because the log file format is a bit cryptic, and was entirely undocumented until recently.

What I’ve created here is a SQL Server Integration Services (SSIS) ETL package that will enumerate the usage log files from disk, parse them, and load them into SQL Server. Then it will archive or delete the files. From there you can easily create SQL Reporting Services reports, cubes, etc. to further analyze the data.

Here is the MSDN documentation on the Usage Event Logs file format:

http://msdn.microsoft.com/en-us/library/bb814929.aspx

Before we get too deep, take a look at my Visual Studio solution so you have some context:

You can see I’ve got the DTSX package, as well as a C# class library and a table creation script.

Because the files are in a binary format, you can’t parse them using a simple ‘flat file’ source in SSIS. Initially I hacked together a quick script source task which did a reasonable job, but then I found some nice C# code for parsing the files at William’s Blog.

I took William’s C# code, with very few changes, and created my class library to do the parsing. I will later reference this library from inside a script source task in SSIS to parse the log files. (Writing an external DLL and referencing it in a script task is *much* faster than writing a custom source adapter for SSIS.)

Let’s start at the beginning though, so here is what the main package loop looks like:

Very simple. The log files are created in a bit of a nested folder structure, with GUIDs and dates. They all have a .log extension (the same as other MOSS logs). The best thing to do is go to Central Administration and tell it to shove all the usage logs in a nice subfolder called ‘Usage’ so you can easily differentiate them from all the other log files. Then we just tell the enumerator to traverse subfolders and it will find all our files.

Each file that is found has its path stored in a variable, and the data flow task is called.

When the data flow task completes, we run a file system task which archives the log files elsewhere (or, with a small change, simply deletes them). All of the paths used here are stored in variables.

And the data flow task:

Once again, very simple. The script source task has the ‘meat and potatoes’ in it. It pushes out all the parsed data, which we simply dump into a SQL table. I take care of type casting in the script task so we don’t have to do it here. You could of course add some logic to purge old records from the table, etc.
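As a rough sketch of that cleanup step, an Execute SQL task at the end of the package could run something like the following. It assumes the `dbo.UsageLog` table shown later in this post. The 90-day retention window is purely an assumed value and is not part of my package.

```sql
-- Sketch only: purge rows older than a retention window.
-- The 90-day value is an assumption; adjust it to your own needs.
DECLARE @RetentionDays int;
SET @RetentionDays = 90;  -- separate SET, since SQL Server 2005 has no inline initialization

DELETE FROM [dbo].[UsageLog]
WHERE [time] < DATEADD(day, -@RetentionDays, GETDATE());
```

On a large table you may prefer to delete in smaller batches to keep the transaction log in check.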

So, on to the script task.

The outputs (and thus the columns available in the log files) for the script task are configured as follows:

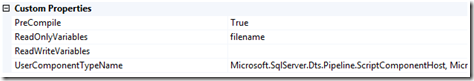

Also, we need to bring in our filename variable from the enumerator so we know which file to parse:

Next, we’ve got to get our custom DLL (from our class library project in the solution) somewhere that we can access it from our script task. You’ve got to copy the parser DLL to this folder:

C:\Program Files\Microsoft SQL Server\90\SDK\Assemblies

Doing this makes the DLL available for reference from within your script task. Putting the DLL in the GAC won’t work.

Now you can add it as a reference. You can see "UsageLogParser" added to my script references here:

Here is the full code listing for the main subroutine in my script source task. This uses the parser class to generate a dataset, then enumerates the rows in the dataset and adds rows to the source adapter’s outputs. The only thing missing from this code listing is a line in PreExecute to pull my filename variable into a local string (path). You could expand on this and trap errors, logging them to an error output.

```vb
Public Overrides Sub CreateNewOutputRows()
    ' path is populated in PreExecute from the enumerator's filename variable
    If (System.IO.File.Exists(path)) Then

        ' Parse the binary usage log into a DataSet using the external parser DLL
        Dim parser As New MOSS2007LogParser.Parser()
        Dim result As DataSet = parser.GetLogDataSet(path)

        ' Emit one output row per parsed log entry, casting types as we go
        For Each row As System.Data.DataRow In result.Tables(0).Rows
            LogDataOutputBuffer.AddRow()
            LogDataOutputBuffer.id = New Guid(row("SiteGUID").ToString())
            LogDataOutputBuffer.time = DateTime.Parse(row("TimeStamp").ToString())
            LogDataOutputBuffer.url = row("Document").ToString()
            LogDataOutputBuffer.sitecollection = row("Web").ToString()
            LogDataOutputBuffer.user = row("UserName").ToString()
            LogDataOutputBuffer.webapp = row("SiteUrl").ToString()
            LogDataOutputBuffer.referral = row("Referral").ToString()
            LogDataOutputBuffer.command = row("Command").ToString()
            LogDataOutputBuffer.useragent = row("UserAgent").ToString()
            LogDataOutputBuffer.querystring = row("QueryString").ToString()
        Next

        result.Dispose()

    End If
End Sub
```

After that, we simply connect the output to our destination adapter. Here is what my destination table looks like. I’m just using the GUID as a primary key and bouncing any duplicates (not that there should be any if you archive/delete the logs). You can add smarter logic if you want to handle that differently. The SQL script for the table is also in the solution.

```sql
USE [BGSD]
GO
SET ANSI_NULLS ON
GO
SET QUOTED_IDENTIFIER ON
GO
SET ANSI_PADDING ON
GO
CREATE TABLE [dbo].[UsageLog](
    [id] [uniqueidentifier] NULL,
    [time] [datetime] NULL,
    [webapp] [varchar](50) NULL,
    [sitecollection] [varchar](50) NULL,
    [url] [varchar](100) NULL,
    [user] [varchar](50) NULL,
    [referral] [varchar](150) NULL,
    [command] [varchar](100) NULL,
    [useragent] [varchar](150) NULL,
    [querystring] [varchar](150) NULL
) ON [PRIMARY]

GO
SET ANSI_PADDING OFF
```
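Once rows are landing in the table, a first report query might look something like the sketch below: daily hits and distinct users per web application. The 30-day window and the output column aliases are my own illustrative choices. Everything else comes from the `dbo.UsageLog` definition above.

```sql
-- Sketch only: daily hit counts and distinct users per web application.
-- The 30-day window and the aliases (day, hits, unique_users) are assumptions.
SELECT
    CONVERT(char(10), [time], 120) AS [day],      -- style 120 truncated to yyyy-mm-dd
    [webapp],
    COUNT(*)               AS hits,
    COUNT(DISTINCT [user]) AS unique_users
FROM [dbo].[UsageLog]
WHERE [time] >= DATEADD(day, -30, GETDATE())
GROUP BY CONVERT(char(10), [time], 120), [webapp]
ORDER BY [day], [webapp];
```

A query like this can feed a Reporting Services dataset directly, or serve as a starting point for a fact table if you go the cube route.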

A few additional notes:

- You need to enable both usage logging and usage log processing in Central Administration. You should make a new folder to save these logs to so that your package can tell them apart from other .log files.

- You do not need to enable Usage report generation in your site collections.

- You should schedule your package to run outside of the time slot for usage processing that you configured in Central Administration. This way the log files are not in use while you load them, and you don’t have to worry about remembering where you left off, etc.

- There is some performance impact to usage logging. If you have previously had this disabled, please pay close attention to any adverse effects.

Download the Visual Studio Solution and DTSX package here. This was created using Visual Studio 2005, and SQL Server Integration Services 2005.